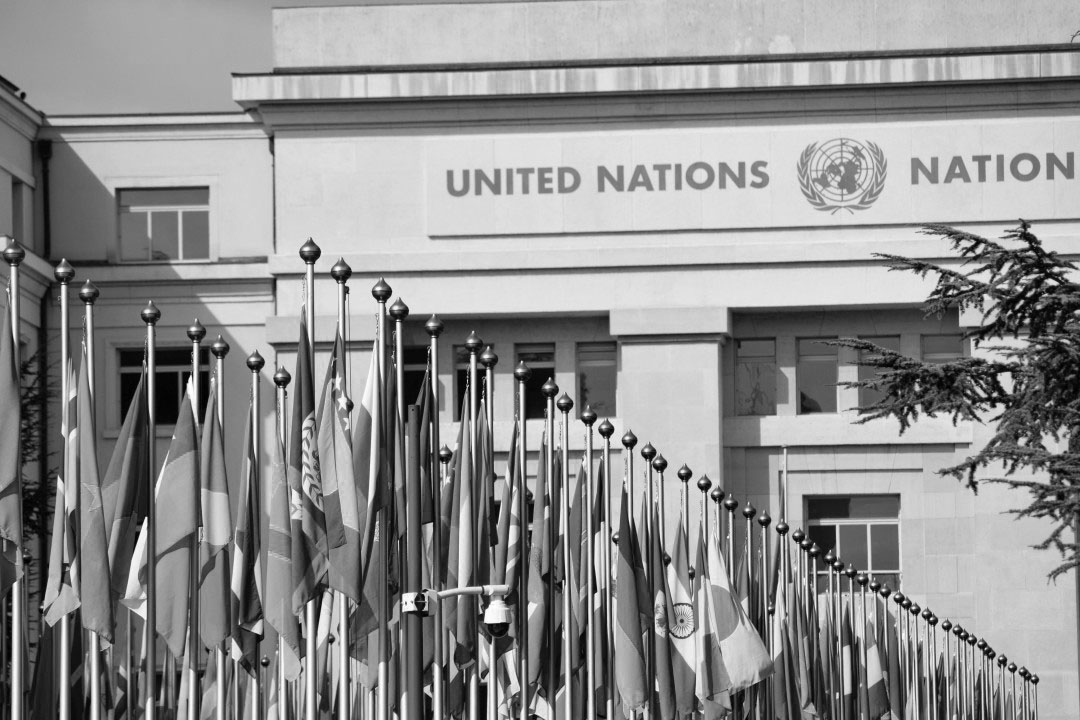

The United Nations B-Tech Project recently released a report highlighting 10 key human rights that generative AI may adversely impact:

- Freedom from Physical and Psychological Harm: AI-generated misinformation can incite violence or harm mental well-being. Example: Deepfakes spreading false narratives.

- Right to Equality and Non-Discrimination: AI can perpetuate biases and stereotypes, leading to discrimination. Example: Biased facial recognition technology.

- Right to Privacy: AI models often collect and process vast amounts of personal data, raising privacy concerns. Example: Unauthorized use of personal data for training AI models.

- Right to Own Property: AI-generated content can infringe on intellectual property rights. Example: AI-generated art mimicking the style of human artists.

- Freedom of Thought, Religion, Conscience, and Opinion: AI-generated content can manipulate beliefs and opinions. Example: Targeted disinformation campaigns.

- Freedom of Expression and Access to Information: AI-generated misinformation can drown out accurate information and limit access to diverse viewpoints. Example: Deepfakes impersonating public figures.

- Right to Participate in Public Affairs: AI-generated content can manipulate elections and undermine democratic processes. Example: Deepfakes spreading false information about candidates.

- Right to Work and to Gain a Living: AI can displace workers and lead to job losses. Example: Automation of tasks previously done by humans.

- Rights of the Child: Children are particularly vulnerable to the harmful effects of AI, such as exposure to inappropriate content or manipulation of their beliefs. Example: AI-powered chatbots providing harmful advice to children.

- Rights to Culture, Art, and Science: AI-generated content can overshadow human creativity and limit cultural diversity. Example: AI-generated art flooding online platforms.

AI and responsible use

I work with AI every day, and I have seen its dark side in marketing. I witnessed first-hand how the allure of AI-generated content led to a disregard for quality and ethics. It ultimately harmed the company’s reputation and, to an extent, my own.

That experience led me to question myself. It pushed me to want to redeem myself. I wanted to understand what AI is, its true potential, its limitations, and most importantly, how to use it ethically and responsibly.

When AI output is favored by businesses, where does humanity fit in? And what about the humans, the readers and society at large, who turn to businesses such as a healthcare clinic for help? How can they trust our messages?

Given the increasing complexity and ethical implications of AI, I think organizations that use AI in their businesses need an AI officer or an equivalent role: someone responsible for overseeing AI-related ethical issues and advocating for human rights. Because human involvement and oversight are THAT important.

I think AI’s potential to push humanity forward is great. It’s evident in the advances across many fields, from research to applications in engineering and medicine. Still, we must be mindful of its impact on the humans it’s meant to be helping.

Reference:

UN B-Tech Project’s report on the “Taxonomy of Human Rights Risks Connected to Generative AI”: https://www.ohchr.org/sites/default/files/documents/issues/business/b-tech/taxonomy-GenAI-Human-Rights-Harms.pdf